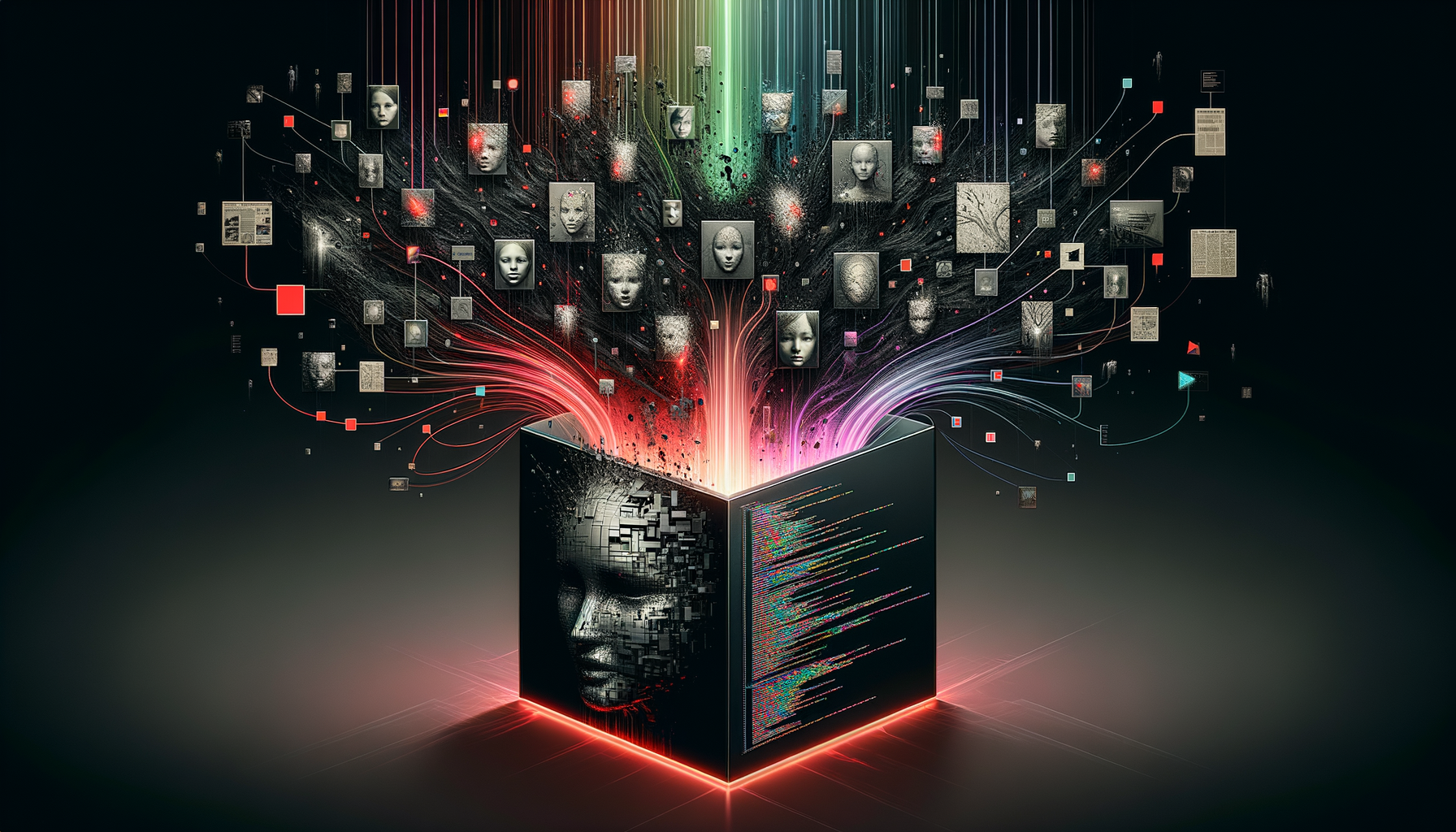

Bias in the Black Box

AI models are only as good as the data they are trained on. This post delves into how societal biases are encoded and amplified by machine learning algorithms.

Unpacking the Black Box: Confronting Bias in AI

Artificial intelligence models are shaped by the data they are trained on, so they often inherit, and can amplify, the societal biases present in that data. This post looks at how those biases become encoded in machine learning algorithms and what proactive steps we can take to mitigate their harmful effects.

Understanding Bias in AI and Machine Learning

In AI, bias refers to systematic errors in decision-making processes that lead to unfair or discriminatory outcomes [1]. These biases can stem from skewed training data, flaws in algorithmic design, or human biases inadvertently embedded in the system [2]. AI systems are not inherently objective: they learn from data and rules provided by humans, which can transfer existing societal prejudices, often unintentionally.

Where Does Bias Come From? Key Sources

Bias in AI systems can manifest at several critical points throughout their development and deployment lifecycle:

- Data Bias: This is arguably the most prevalent and impactful source. Data bias occurs when the datasets used to train machine learning models are unrepresentative, incomplete, or reflect historical prejudices, societal stereotypes, or systemic inequalities [3]. For example, if a hiring AI is trained on historical recruitment data that disproportionately favors certain demographics, it may perpetuate that bias, leading to discriminatory hiring practices [4]. Subcategories of data bias include:

  - Sampling Bias: The training data fails to accurately represent the diversity of the population the AI will interact with.

  - Measurement Bias: Inconsistencies or flaws in how data is collected or labeled.

  - Historical Bias: Existing societal biases from the past are embedded directly into the data itself.

- Algorithmic Bias: This type of bias can emerge from the algorithms themselves. It often arises from unintentional preferences or skewed training data [1], but can also be introduced if the algorithm's design incorporates biased assumptions or criteria, even if the data appears balanced [2].

- User Bias: This occurs when human users of AI systems introduce their own biases, either consciously or unconsciously, through biased input, selective interpretation of outputs, or their interactions with the system [2].
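Sampling bias, one of the data bias subcategories above, is among the easier sources to check for directly. The sketch below compares each group's share of a training set with its share of the population the model is meant to serve. The function name `representation_gaps`, the group labels, and the reference shares are all hypothetical and chosen only for illustration; in practice the reference shares would have to come from a trusted external source.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return (share in sample - share in population) for each group."""
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in population_shares.items()
    }


# Hypothetical training set: 80 records from group "A", 20 from group "B".
training_groups = ["A"] * 80 + ["B"] * 20
# Assumed reference shares for the population the model will serve.
reference_shares = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(training_groups, reference_shares)
for group, gap in gaps.items():
    # A positive gap means over-representation, a negative gap under-representation.
    print(f"group {group}: {gap:+.2f}")
```

A large negative gap for a group suggests the model will see comparatively few examples from it. This check says nothing about measurement or historical bias, which need a closer look at how the labels themselves were produced.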

The Far-Reaching Impact of Biased AI

Biased AI systems can have profound and detrimental consequences, leading to unfair treatment and discrimination across various critical sectors:

- Hiring and Employment: AI-powered recruitment tools might inadvertently screen out qualified candidates based on protected characteristics like gender or race.

- Lending and Finance: Loan approval algorithms could disproportionately deny credit to specific demographic groups.

- Criminal Justice: Predictive policing tools may lead to the over-policing of minority communities, while risk assessment tools can unfairly influence sentencing decisions.

- Healthcare: Diagnostic AI systems, if trained on unrepresentative patient data, might perform poorly for certain demographics, potentially leading to misdiagnosis or inadequate treatment.

Beyond direct discrimination, biased AI can reinforce harmful stereotypes and disproportionately affect marginalised communities, thereby exacerbating existing societal inequalities [2].
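Uneven performance across demographics, as in the healthcare example above, can be hidden by a single overall accuracy figure. As a minimal sketch, assuming binary labels and a hypothetical helper named `accuracy_by_group`, the code below splits accuracy by group. The data is invented purely to illustrate the effect.

```python
from collections import defaultdict


def accuracy_by_group(groups, y_true, y_pred):
    """Return the share of correct predictions within each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, prediction in zip(groups, y_true, y_pred):
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation set with five records per group.
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 0, 1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1, 1, 0]

per_group = accuracy_by_group(groups, y_true, y_pred)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

print(f"overall accuracy: {overall:.2f}")
for group, accuracy in per_group.items():
    print(f"group {group} accuracy: {accuracy:.2f}")
```

In this toy data the overall accuracy of 0.70 masks a gap between 1.00 for group A and 0.40 for group B, which is the pattern a per-group breakdown is meant to surface.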

Ethical Imperatives and Mitigation Strategies

Addressing bias in AI is not merely a technical challenge; it is a fundamental ethical imperative. Key ethical considerations guiding AI research and development include:

- Fairness: A cornerstone principle aiming to eliminate biases and promote equitable treatment, ensuring algorithms do not discriminate based on protected characteristics [1].

- Transparency and Explainability: Many AI systems operate as "black boxes," making their decision-making processes opaque [5]. Ethical AI advocates for transparency, allowing users to understand how AI systems function and how their data is utilised. Explainable AI (XAI) is crucial for fostering accountability and building trust [6].

- Privacy: Safeguarding user data is paramount, especially given that AI systems often handle sensitive information [7].

- Accountability: Organisations must assume full ownership of the actions and outcomes generated by their AI systems [8].

- Human Oversight: Continuous human oversight is indispensable, as AI systems are not "set it and forget it" tools [9].

Researchers and data scientists are actively developing and implementing techniques to identify and mitigate biases in machine learning models. These proactive strategies include:

- Diverse and Representative Data Collection: Ensuring training data is diverse and accurately represents all relevant populations is crucial [10]. This may involve oversampling underrepresented groups or generating synthetic data to achieve balanced datasets.

- Fairness-Aware Algorithms: Developing algorithms that explicitly incorporate fairness constraints during the training process so that the resulting models are less biased. Techniques such as adversarial training, reweighing, and re-sampling can reduce algorithmic bias [10], though none of them guarantees an unbiased model.

- Bias Detection and Evaluation: Employing analytical techniques like disparate impact analysis to measure differences in model outcomes across various groups, thereby identifying potential biases [10].

- Continuous Monitoring and Review: AI models require ongoing monitoring and review in operational environments, as their performance and potential biases can evolve over time [11].

- Interdisciplinary Collaboration: Effectively addressing bias in AI necessitates collaboration among a wide array of stakeholders, including governments, businesses, academia, and civil society, to develop comprehensive and sustainable solutions [12].
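Two of the strategies above, disparate impact analysis and reweighing, are simple enough to sketch in a few lines. The code below is an illustration under stated assumptions, not a production implementation: outcomes are binary (1 is favourable), there is a single group attribute, and the function names and data are hypothetical. Disparate impact is computed as the ratio of favourable-outcome rates between two groups, and the reweighing weights follow the usual formula P(group) * P(outcome) / P(group, outcome). For simplicity the same toy outcomes are both measured and reweighted.

```python
from collections import Counter


def group_rate(groups, outcomes, weights, group):
    """Weighted share of favourable outcomes (1) within one group."""
    rows = [i for i, g in enumerate(groups) if g == group]
    favourable = sum(weights[i] * outcomes[i] for i in rows)
    return favourable / sum(weights[i] for i in rows)


def disparate_impact(groups, outcomes, unprivileged, privileged, weights=None):
    """Ratio of favourable-outcome rates: unprivileged over privileged."""
    if weights is None:
        weights = [1.0] * len(outcomes)
    return (group_rate(groups, outcomes, weights, unprivileged)
            / group_rate(groups, outcomes, weights, privileged))


def reweighing_weights(groups, outcomes):
    """Per-record weight P(group) * P(outcome) / P(group, outcome)."""
    n = len(groups)
    group_counts = Counter(groups)
    outcome_counts = Counter(outcomes)
    joint_counts = Counter(zip(groups, outcomes))
    return [
        group_counts[g] * outcome_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, outcomes)
    ]


# Hypothetical data: group "A" has 6 of 10 favourable outcomes, group "B" 3 of 10.
groups = ["A"] * 10 + ["B"] * 10
outcomes = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

before = disparate_impact(groups, outcomes, unprivileged="B", privileged="A")
weights = reweighing_weights(groups, outcomes)
after = disparate_impact(groups, outcomes, "B", "A", weights=weights)

print(f"disparate impact before reweighing: {before:.2f}")
print(f"disparate impact after reweighing:  {after:.2f}")
```

On this toy data the ratio is 0.50 before weighting and 1.00 after, because the weights make group and outcome statistically independent in the weighted data. A ratio below 0.8 is often treated as a warning sign, following the "four-fifths rule" used in US employment guidance, but the right threshold depends on context. The weights would then be passed to a learner that supports per-sample weights; the sketch stops short of that step and does not handle a group with no favourable outcomes.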

The ethical responsibility to address bias and ensure fairness extends far beyond the technical realm. It demands a multi-faceted approach that critically examines data, algorithms, and the human decision-making processes that underpin AI development [1].

References

- [1] IBM. (n.d.). What is AI ethics? Retrieved from https://www.ibm.com/topics/ai-ethics

- [2] MDPI. (n.d.). Bias in Artificial Intelligence. Retrieved from https://www.mdpi.com/journal/futureinternet/special_issues/Bias_AI

- [3] Vation Ventures. (n.d.). AI Bias: What it is, Types, Examples, and How to Prevent it. Retrieved from https://www.vationventures.com/blog/ai-bias

- [4] CloudThat. (n.d.). Understanding and Mitigating Bias in AI. Retrieved from https://www.cloudthat.com/resources/blog/understanding-and-mitigating-bias-in-ai/

- [5] Exasol. (n.d.). AI Bias: What it is, Types, Examples, and How to Prevent it. Retrieved from https://www.exasol.com/blog/ai-bias/

- [6] AI Multiple. (n.d.). AI Bias: Types, Examples, & How to Prevent it. Retrieved from https://aimultiple.com/ai-bias

- [7] TechTarget. (n.d.). What is AI ethics? Retrieved from https://www.techtarget.com/searchenterpriseai/definition/AI-ethics

- [8] ResearchGate. (n.d.). Bias in Artificial Intelligence. Retrieved from https://www.researchgate.net/publication/350000000_Bias_in_Artificial_Intelligence

- [9] NIH. (n.d.). Ethical AI. Retrieved from https://www.nih.gov/research-training/medical-research-initiatives/big-data-science/ethical-ai

- [10] Encord. (n.d.). How to Mitigate AI Bias. Retrieved from https://encord.com/blog/how-to-mitigate-ai-bias/

- [11] PMI. (n.d.). Ethical AI: A Guide to Responsible AI Development. Retrieved from https://www.pmi.org/learning/library/ethical-ai-responsible-development-12987

- [12] GeeksforGeeks. (n.d.). Bias in AI. Retrieved from https://www.geeksforgeeks.org/bias-in-ai/

Written by

Ben Colwell

As a Senior Data Analyst / Technical Lead, I’m expanding into AI engineering with a strong commitment to responsible AI practices that drive both innovation and trust.
