AI Governance and the Future of Humanity

Who controls the development and deployment of powerful AI? A look at the current state of AI governance and the critical need for global cooperation.

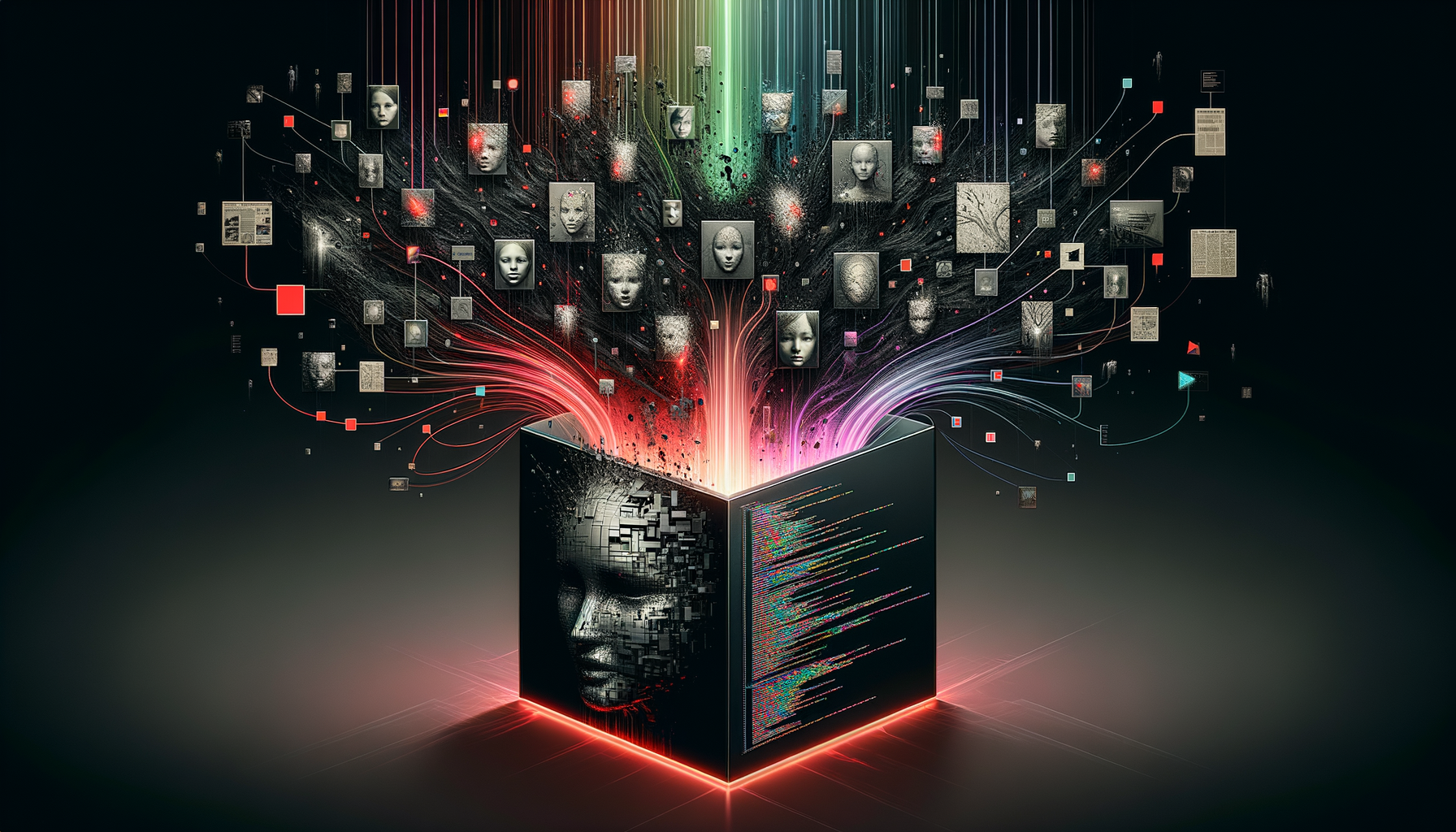

Navigating the Future: AI Governance and the Human Imperative

As artificial intelligence reshapes more of daily life, a basic question follows: who decides how it is built and used? This post looks at why robust governance frameworks are needed and how they can keep AI development aligned with human values.

The Imperative of AI Governance

With AI systems becoming more capable and more deeply integrated into society, the question of how they are governed has become pressing. AI governance is the process of translating abstract ethical principles into concrete frameworks, actionable policies, and enforceable regulations. It addresses questions such as: Who is accountable for an AI system's decisions? Which aspects of AI are subject to oversight? At what stages of the development lifecycle should governance intervene? And how can these mechanisms be implemented effectively?

Academic research on AI governance typically synthesises existing literature, maps key research themes, and identifies knowledge gaps. It also examines the roles of different stakeholders (AI developers, industry leaders, academic institutions, governmental bodies, and civil society organisations) in shaping governance structures. Key areas of focus include explainability standards, algorithmic fairness, safety, human-AI collaboration, and clear, enforceable liability frameworks.
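To make one of these focus areas concrete, algorithmic fairness is often discussed in terms of measurable gaps between groups. The sketch below computes one common measure, the demographic parity gap (the difference between the highest and lowest rate of favourable decisions across groups). The function names and the sample data are hypothetical and chosen only for illustration; real audits use several metrics, and no single number settles whether a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of favourable (1) decisions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions (1 = approved, 0 = rejected) for two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates)  # group "a" is approved 75% of the time, group "b" 25%
print(gap)    # 0.5
```

A governance framework might set a threshold on a gap like this and require review when it is exceeded; where that threshold sits is a policy decision, not a technical one.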

There is a growing emphasis on global AI governance, reflecting the recognition that AI's impact crosses national borders. This requires balancing collective responsibility with targeted accountability, especially as AI systems become more autonomous and influential.

Ethical Foundations: Guiding AI Development

AI ethics examines the moral principles, rules, and guidelines that should underpin the full lifecycle of AI development and deployment. It also takes on the challenge of designing AI systems that behave ethically. Academic discussion in the field frequently addresses transparency, explainability, privacy, justice, fairness, and the broader societal impact of AI technologies.

A central concept is "ethics by design," which calls for integrating ethical considerations from the very start of AI development. Much like "privacy by design," this proactive approach aims to embed ethical values directly in the system's architecture and decision-making processes, rather than retrofitting them as an afterthought.

A distinction is often drawn between the "Ethics of AI" (the ethical principles guiding AI development) and "Ethical AI" (AI systems that behave in an ethically sound manner). Both are needed if AI is to serve humanity well.

Safeguarding the Future: The Pursuit of AI Safety

AI safety research focuses on identifying and mitigating the risks associated with advanced AI systems. These risks range from immediate, practical concerns, such as algorithmic bias or system failures, to the potentially existential threats posed by highly capable and autonomous AI.

The field encompasses work on adversarial robustness (making AI resilient to malicious attacks), interpretability (understanding how AI arrives at its decisions), and technical benchmarks for assessing dangerous capabilities in AI systems. Key challenges include the alignment problem (ensuring that an AI system's goals are aligned with human values), preventing catastrophic AI risks, and managing potential harms from increasingly agentic algorithmic systems.
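Adversarial robustness can feel abstract, so here is a minimal sketch of the kind of attack it is meant to resist. It uses a hand-written linear classifier with made-up weights (all names and numbers are hypothetical) and nudges each input feature by a small amount in the direction that most changes the score, in the spirit of the fast gradient sign method. For a linear model the gradient of the score with respect to the input is simply the weight vector, so no machine learning library is needed.

```python
def score(weights, bias, x):
    """Linear score: positive values mean class 1, negative mean class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x):
    return 1 if score(weights, bias, x) >= 0 else 0


def sign(value):
    return (value > 0) - (value < 0)


def adversarial_example(weights, bias, x, epsilon):
    """Shift every feature by at most epsilon, pushing the score
    toward the class the model did NOT predict."""
    direction = -1 if predict(weights, bias, x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, weights)]


# Hypothetical model and input, for illustration only.
weights = [2.0, -1.0, 0.5]
bias = -0.2
x = [0.3, 0.1, 0.2]
epsilon = 0.2

x_adv = adversarial_example(weights, bias, x, epsilon)
print(predict(weights, bias, x))      # 1
print(predict(weights, bias, x_adv))  # 0: a small change flips the decision
```

Robustness work asks how large epsilon must be before the decision flips, and how to train or constrain models so that small, deliberate changes like this do not alter the outcome.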

Many researchers stress the need for an epistemically inclusive and pluralistic approach to AI safety, one that can accommodate the full range of safety considerations and motivations from diverse perspectives. Influential works in this area, such as "Concrete Problems in AI Safety" by Amodei et al. (2016), not only describe anticipated problems but also propose concrete technical research directions for addressing them.

The Interconnected Future: A Holistic Approach

AI governance, ethics, and safety are not isolated disciplines; they are closely interconnected. Ethical principles provide the foundation and often inform governance frameworks, while safety research contributes directly to the practical implementation of ethical and responsible AI systems. As AI continues to advance, an approach that integrates all three will be essential for managing the challenges ahead and for realising AI's potential for the benefit of all humanity.

References

- Amodei, D., Olah, C., Steinhardt, J., Christiano, P., Schulman, J., & Mané, D. (2016). Concrete Problems in AI Safety. arXiv preprint arXiv:1606.06565.

- Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1(9), 389-399.

- Floridi, L., & Cowls, J. (2019). A unified framework of five principles for AI in society. Harvard Data Science Review, 1(1).

- Russell, S., & Norvig, P. (2010). Artificial Intelligence: A Modern Approach (3rd ed.). Prentice Hall.

- Dafoe, A. (2018). AI governance: A research agenda. Governance of AI Program, Future of Humanity Institute, University of Oxford.

Written by

Ben Colwell

As a Senior Data Analyst / Technical Lead, I’m expanding into AI engineering with a strong commitment to responsible AI practices that drive both innovation and trust.
